ROS Communications


What This is Not About

ROS is quite extensive; we will not cover:

- Build System (Catkin & Rosdep)

- Software Packaging (Bloom)

- Build Farm (Open/Closed)

- Capabilities (Drivers, Algorithms, ...)

- Tools (Logging, Introspecting, Viz, ...)

- Community (Documentation, Workshops, ...)

Today we focus on the plumbing.

Why the Plumbing?

- It's core

- Undergoing significant redevelopment

- Other areas are relatively static

Contents

1.0

- History

- State

- Technicals

- Extensions

- Shortcomings

2.0

- Motivation

- State

- Roadmap

- Building on DDS

- Legacy Support

1.0 - History

- 2007 : Stanford (STAIR, PR1)

- 2007 : Topology : Master/Node/Param Server concepts fixed

- 2007 : Transports : reliable (TCP)/unreliable (UDP) types defined

- 2007 : Messaging Patterns : publishers, subscribers & services

- 2007 : Implementation : POSIX C++

- 2008 : Moved to Willow Garage

- 2008 : Reference Robot : PR2

- 2008 : Implementation : Lisp

- 2009 : Implementation : Python

- 2009 : Implementation : ARM support for C++

- 2009 : Messaging Patterns : actions (C++/Python)

- 2010 : Nodelets (IntraProcess, C++ only)

- 2010 : 1.0 Release

- 2011 : Reference Robot : Turtlebot

- 2011 : Implementation : Windows support for C++

- 2011 : Implementation : Java/Android

- 2012 : ROS 2.0 movement started

- 2014 : Transferred to OSRF

Current State

- Stable

- No new development (since 2011)

- No new development planned

- Various community workarounds introduced (since 2011)

Mostly everyone

1.0 - Topology

The glue that makes this work is an XML-RPC server in each component.
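To make the XML-RPC glue concrete, here is a minimal sketch of a toy master built only on Python's standard `xmlrpc` modules. It mimics two calls from the ROS 1 Master API, `registerPublisher` and `registerSubscriber`, which take `(caller_id, topic, topic_type, caller_api)` and reply with `[code, statusMessage, value]`. The in-memory bookkeeping, host names and ports are assumptions for illustration, and unlike the real master this toy does not push `publisherUpdate` callbacks to already-registered subscribers.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer


class ToyMaster:
    """Hypothetical in-memory stand-in for the ROS 1 master's topic registry."""

    def __init__(self):
        self.publishers = {}   # topic -> list of publisher XML-RPC URIs
        self.subscribers = {}  # topic -> list of subscriber XML-RPC URIs

    def registerPublisher(self, caller_id, topic, topic_type, caller_api):
        self.publishers.setdefault(topic, []).append(caller_api)
        # value = current subscribers (the real master would also notify them)
        return [1, "Registered [%s] as publisher of [%s]" % (caller_id, topic),
                self.subscribers.get(topic, [])]

    def registerSubscriber(self, caller_id, topic, topic_type, caller_api):
        self.subscribers.setdefault(topic, []).append(caller_api)
        # value = current publishers; the subscriber then contacts them directly
        return [1, "Subscribed to [%s]" % topic, self.publishers.get(topic, [])]


def start_master():
    # Port 0 lets the OS pick a free port (a real ROS master defaults to 11311).
    server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
    server.register_instance(ToyMaster())
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


server = start_master()
master = ServerProxy("http://127.0.0.1:%d/" % server.server_address[1])
master.registerPublisher("/talker", "/chatter", "std_msgs/String",
                         "http://talker-host:40001/")
code, status, publishers = master.registerSubscriber(
    "/listener", "/chatter", "std_msgs/String", "http://listener-host:40002/")
print(publishers)  # ['http://talker-host:40001/']
server.shutdown()
server.server_close()
```

The master only brokers names to URIs; the actual message data then flows peer to peer between nodes, which is why every component needs its own XML-RPC endpoint.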

1.0 - Topology

[Figure: 1) master/param server, 2) nodes, 3) registrations, 4) connections. See http://wiki.ros.org/Master]

1.0 - Transports

Conceptually organised as

Other options (e.g. shared memory) were explored but discarded. The consensus was: 1) for speed, use C++ intraprocess connections; 2) for convenience, use Python TCPROS connections.
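For a feel of what a TCPROS connection looks like on the wire, the sketch below encodes and decodes a connection header as described in the ROS 1 TCPROS documentation: each field is a `name=value` string prefixed by its 4-byte little-endian length, and the whole header is itself prefixed by a 4-byte length. The field names used in the example (`callerid`, `topic`, `type`, `md5sum`) are standard TCPROS header fields; the `"*"` md5sum is the wildcard some tools send, and the node and topic names are illustrative.

```python
import struct


def encode_header(fields):
    """Encode a TCPROS connection header from a dict of str -> str."""
    body = b""
    for key, value in fields.items():
        field = ("%s=%s" % (key, value)).encode("utf-8")
        body += struct.pack("<I", len(field)) + field  # 4-byte LE length + "key=value"
    return struct.pack("<I", len(body)) + body  # whole header is length-prefixed too


def decode_header(data):
    """Decode a length-prefixed TCPROS connection header into a dict."""
    (total,) = struct.unpack_from("<I", data, 0)
    fields, offset, end = {}, 4, 4 + total
    while offset < end:
        (n,) = struct.unpack_from("<I", data, offset)
        raw = data[offset + 4: offset + 4 + n].decode("utf-8")
        key, _, value = raw.partition("=")  # values may themselves contain '='
        fields[key] = value
        offset += 4 + n
    return fields


sub_header = {"callerid": "/listener", "topic": "/chatter",
              "type": "std_msgs/String", "md5sum": "*"}
wire = encode_header(sub_header)
decoded = decode_header(wire)
```

Both sides exchange such a header when the TCP connection opens; after that, each message is sent as its own length-prefixed blob.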

1.0 - Messaging

Headers, modules, artifacts

Support for

A limited set of
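As an illustration of how a ROS 1.0 message is laid out once serialised, the sketch below packs a `std_msgs/Header` (`uint32 seq`, `time stamp`, `string frame_id`). It relies on the ROS 1 serialisation rules: little-endian fixed-size fields, `time` as two `uint32` values (seconds, nanoseconds), and strings as a `uint32` length followed by the bytes. The function names are hypothetical helpers, not part of any ROS client library.

```python
import struct


def serialize_header(seq, secs, nsecs, frame_id):
    """Serialise a std_msgs/Header using ROS 1 wire rules (little-endian)."""
    frame = frame_id.encode("utf-8")
    # uint32 seq, time (uint32 secs, uint32 nsecs), string = uint32 length + bytes
    return struct.pack("<IIII", seq, secs, nsecs, len(frame)) + frame


def deserialize_header(buf):
    """Inverse of serialize_header; returns (seq, secs, nsecs, frame_id)."""
    seq, secs, nsecs, n = struct.unpack_from("<IIII", buf, 0)
    frame_id = buf[16:16 + n].decode("utf-8")
    return seq, secs, nsecs, frame_id


payload = serialize_header(7, 100, 500, "map")
```

Over TCPROS this payload would additionally be prefixed by its own 4-byte length.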

1.0 - Parameter Server

Primarily used for static, pre-launch configuration of nodes.
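The parameter server stores values in a namespace tree, and fetching a namespace returns the whole sub-tree as a dictionary. The toy class below is a hypothetical, in-process sketch of that lookup behaviour only; the real server is reached over XML-RPC through the master, and the `ToyParamServer` name and its methods are assumptions for illustration.

```python
class ToyParamServer:
    """Hypothetical in-memory parameter tree keyed by '/'-separated names."""

    def __init__(self):
        self.tree = {}

    @staticmethod
    def _split(key):
        return [part for part in key.split("/") if part]

    def set_param(self, key, value):
        parts = self._split(key)
        if not parts:
            raise ValueError("cannot set the root namespace in this sketch")
        node = self.tree
        for part in parts[:-1]:
            child = node.get(part)
            if not isinstance(child, dict):  # create or overwrite intermediate namespaces
                child = node[part] = {}
            node = child
        node[parts[-1]] = value

    def get_param(self, key):
        node = self.tree
        for part in self._split(key):
            if not isinstance(node, dict) or part not in node:
                raise KeyError(key)
            node = node[part]
        return node  # a leaf value, or a dict for a whole namespace


params = ToyParamServer()
params.set_param("/camera/width", 640)
params.set_param("/camera/height", 480)
camera = params.get_param("/camera")
```

Nodes typically read such values once at startup, which is why the server suits static configuration better than live tuning.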

1.0 - Language Implementations

1.0 - How it Got Here

- Single robot

- Massive computational resources on board

- Real time requirements met in a custom manner

- Excellent network connectivity

- Applications in research

- Maximum flexibility, nothing prescribed or proscribed

- e.g., “we don’t wrap your main()”.

1.0 - Shortcomings

Centralised master - Autonomous robots each need their own master

Maintenance - Not a large enough developer or user base

Impractical wireless communications - Missing unreliable implementations

- Unreliable transport configuration (hints) is minimal.

- No multicast support - tf trees and point clouds are expensive

Node API only exposed at runtime - Requires expert knowledge to organise a system launch

- Cannot validate/manipulate components on launching

Cannot manage the computational graph - No lifecycle management

- Cannot direct the computation

- Cannot dynamically realign connections

Heavy - too heavy for embedded (< armv6)

Static parameter serving - awkward workarounds to provide dynamic parameterisations

Not realtime

2.0 - Motivation

Support

- Teams of robots

- Small Embedded

- Non-ideal networks

- Connect to relevant communities

- Support patterns for building and structuring systems

Offload to

2.0 - Current State

Decisions

No central master - discovery node by node

Open licensing - no closed dependencies

ROS messaging types - continue using the external ROS message type formats (it worked)

- Check cost/apply converters to satisfy any middleware requirements

Implement on DDS - Offload expensive middleware development

- Connect with major players in similar industries

Backwards compatibility - support ways of talking with ROS 1.0

Development

Design - messaging/library/stack layout

Simple examples - validated working pub/sub, services, ...

Unit testing - across Linux, ARM, Windows.

2.0 - Roadmap

Working prototype with fundamental components available for human consumption ~end of summer 2015.

Quite likely a couple of years before fully featured.

2.0 - DDS

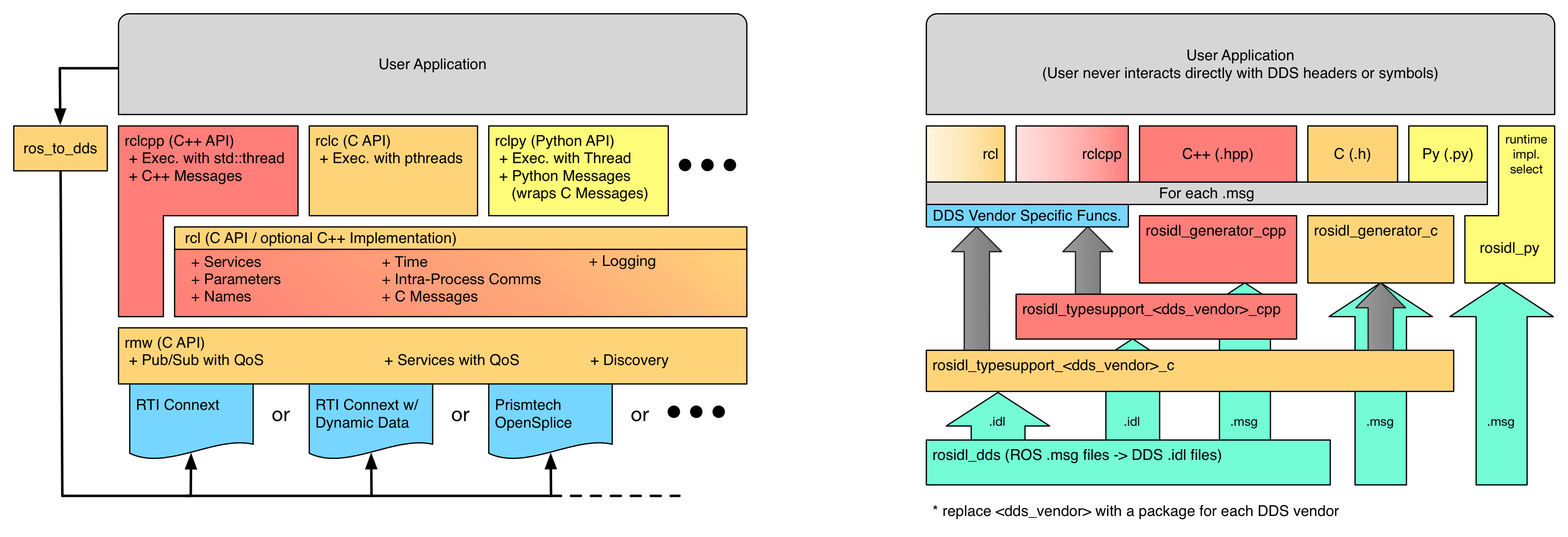

2.0 - ROS Client Library Stack

2.0 - Discovery & Segmentation

Discovery - Nodes use Multicast/Broadcast UDP to find each other

- Implemented with the SDP/SPDP/SEDP protocols from DDS.

Domains - DDS Concept

- Connect to each other on separate multicast domains.

- Replaces the old concept of a ROS Master
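One way domains keep traffic apart is that each domain ID maps to its own set of UDP ports. The sketch below computes them using the default well-known port parameters from the DDSI-RTPS specification (PB = 7400, DG = 250, PG = 2, offsets d0 = 0, d1 = 10, d2 = 1, d3 = 11) and the default discovery multicast group 239.255.0.1. Individual DDS vendors can override these values, so treat the numbers as the spec defaults rather than what every implementation uses.

```python
# Default well-known port parameters from the DDSI-RTPS specification.
PB, DG, PG = 7400, 250, 2        # port base, domain gain, participant gain
D0, D1, D2, D3 = 0, 10, 1, 11    # per-purpose offsets
DEFAULT_MULTICAST_GROUP = "239.255.0.1"


def rtps_ports(domain_id, participant_id=0):
    """Return the default RTPS UDP ports for a domain/participant pair."""
    base = PB + DG * domain_id
    return {
        "discovery_multicast": base + D0,                   # SPDP announcements
        "discovery_unicast": base + D1 + PG * participant_id,
        "user_multicast": base + D2,                        # user data, multicast
        "user_unicast": base + D3 + PG * participant_id,    # user data, unicast
    }


domain0 = rtps_ports(0)
domain1 = rtps_ports(1, participant_id=2)
```

Two robots on different domain IDs therefore never hear each other's discovery announcements, which is what lets them run without sharing a master.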

Partitions - DDS Concept

- Abstract partitioning for nodes on a single domain.

- Like partitions on a hard drive, but more flexible.
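Partitions segment a single domain by name: a writer and a reader only match if they share at least one partition, and an empty partition list means the default partition (the empty string). The function below is a simplified sketch of that rule, using Python's `fnmatch` for wildcard names and, as in DDS, not matching two wildcard expressions against each other; it is not any vendor's implementation, and the partition names are illustrative.

```python
from fnmatch import fnmatchcase


def _is_wildcard(name):
    return any(ch in name for ch in "*?[")


def partitions_match(writer_parts, reader_parts):
    """Simplified DDS partition matching between a writer and a reader."""
    writer_parts = writer_parts or [""]  # empty list -> default partition ""
    reader_parts = reader_parts or [""]
    for w in writer_parts:
        for r in reader_parts:
            if w == r:
                return True
            # A wildcard on one side may match a literal name on the other side.
            if _is_wildcard(w) and not _is_wildcard(r) and fnmatchcase(r, w):
                return True
            if _is_wildcard(r) and not _is_wildcard(w) and fnmatchcase(w, r):
                return True
    return False
```

For example, a reader in partition `robot*` would pick up writers in `robot1` and `robot2`, while writers and readers that declare no partition all meet in the default one.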

2.0 - Transports

- Focus on reliable and unreliable transports

- Build up profiles for connection configurations and apply them to all unreliable connections on a system.

- This is important for settings that can be configured by a system integrator

- e.g. Standard Wireless Lab Profile

- e.g. Outdoors Wireless.

- Distinguish transport hints that must be applied programmatically

- This is important for settings that affect how you write code

- Save backlogs to send when a wireless connection is re-established.

- Make use of multicast when available (when the DDS layer supports it).
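The split between integrator profiles and programmatic hints can be sketched with a small data type whose fields loosely mirror the DDS RELIABILITY, HISTORY (keep-last depth) and DURABILITY QoS policies. Everything below is hypothetical: the `QosProfile` type, the profile names taken from the examples above, and the depth values are assumptions chosen for illustration, not a ROS 2.0 API.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class QosProfile:
    reliability: str    # "reliable" or "best_effort" (cf. DDS RELIABILITY)
    history_depth: int  # samples kept per topic (cf. DDS HISTORY, keep-last)
    durability: str     # "volatile" or "transient_local" (cf. DDS DURABILITY)


# Hypothetical integrator-level profiles; the numbers are illustrative only.
PROFILES = {
    "standard_wireless_lab": QosProfile("best_effort", 10, "volatile"),
    "outdoors_wireless": QosProfile("best_effort", 1, "volatile"),
}


def configure(connections, profile_name):
    """Apply a profile to every unreliable connection; leave reliable ones alone."""
    profile = PROFILES[profile_name]
    return {topic: (profile if qos.reliability == "best_effort" else qos)
            for topic, qos in connections.items()}


connections = {
    "/scan": QosProfile("best_effort", 5, "volatile"),
    "/cmd_vel": QosProfile("reliable", 10, "volatile"),
}
configured = configure(connections, "outdoors_wireless")

# A programmatic hint set in code: keep a backlog for late or reconnecting readers.
backlog_hint = replace(PROFILES["outdoors_wireless"],
                       history_depth=100, durability="transient_local")
```

The profile can be swapped at deployment time without touching node code, whereas the backlog hint changes the node's behaviour and so belongs in the code itself.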

2.0 - Names

- Runtime Renaming

- Aliasing

- e.g. alias /foo to /foo_compressed with lz compression

Useful for managing and building up complex systems.
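Renaming builds on the ROS 1 name-resolution rules: global names (`/foo`) are used as-is, relative names (`foo`) are resolved against the node's namespace, and private names (`~foo`) against the node's own name, after which a remapping table (what `foo:=bar` sets on the command line) may redirect the resolved name. The sketch below implements those rules and adds a hypothetical alias table for the `/foo` to `/foo_compressed` example; the alias mechanism and the `ALIASES` name are assumptions, not an existing API.

```python
def resolve_name(name, node_namespace, node_name, remappings=None):
    """Resolve a ROS-style name, then apply any remapping of the resolved name."""
    ns = node_namespace.rstrip("/")
    if name.startswith("/"):
        resolved = name                                   # global name
    elif name.startswith("~"):
        resolved = ns + "/" + node_name + "/" + name[1:]  # private name
    else:
        resolved = ns + "/" + name                        # relative name
    return (remappings or {}).get(resolved, resolved)


# Hypothetical alias table: readers of /foo are served a compressed stream.
ALIASES = {"/foo": ("/foo_compressed", "lz")}


def lookup_alias(resolved):
    """Return (actual_topic, compression) for a resolved name."""
    return ALIASES.get(resolved, (resolved, None))
```

Resolving everything to a fully qualified name first is what makes runtime renaming safe: remappings and aliases only ever need to match one canonical form.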

2.0 - Languages

2.0 - Managing a Runtime System

Legacy Support

The goal is a smooth transition: the fundamental ROS API (ROS messages) will remain the same, while the client libraries will provide ways of connecting to and talking with each other.